Problems with nutrition science and how to spot them

Here at the BTN Academy we promote evidence-based practice. Evidence-based practice doesn’t JUST mean that you read a bunch of research and base your actions on that, though. It’s properly defined as a combination of the best available evidence, your professional expertise and (most importantly in my opinion) your client’s preferences and specific situation.

For example, it may be that we can pinpoint an ideal protein intake for someone, but if the individual really struggles to eat that much we could opt for a lower intake – your client is aware that a higher intake would theoretically be better, but they’re OK with losing a little bit of progress for the sake of living an easier life.

But the fact remains that evidence-based practice utilises things found in the research. We utilise the data, the hard evidence, the FACTS. But there are even problems there because, honestly, most nutrition science isn’t actually that good, and in today’s blog I want to outline why and what you can do about it as a conscientious practitioner.

I could go in a number of different directions here. For example, I could speak on the strange case of anti-fat research in the 1960s and 70s. Briefly, a team of researchers in 2016 unearthed a number of documents indicating a form of conspiracy by the sugar industry to cast doubt over the negative health effects of their product by instead pushing dietary fat into the limelight (1). This is a story that has been received extremely well by popular media but is, in fact, significantly more controversial in the scientific community itself (2). Something to keep an eye on for sure, but something that would be hard for you or me to really spot for ourselves.

I could also look at the fact that for most of its history nutrition research has barked up the wrong tree. For decades researchers have fallen into the trap of nutritionism – the belief that nutrition can be described by looking at the constituent parts of foods in isolation. This has meant that research has looked into saturated fat, cholesterol, sugar, carbohydrate, and so on, rather than the foods themselves. Recent food-first research has turned this on its head, however, highlighting a number of historic misconceptions. For example, while early research found a link between saturated fat and heart disease, leading to recommendations for eating low fat dairy, later research has found that when said saturated fat is eaten within the food matrix of a dairy food such as milk, cheese or yoghurt, the association, if it exists at all, may go the other way, with disease risk being reduced (10). This is something that is now changing, however, so it’s not going to be the bulk of this article.

No, today I’m not looking to write about corruption and grand conspiracy theories (though I love them, ask me about aliens or mud floods some time) or people missing forests for trees; rather I want to talk about something FAR more simple yet more wide reaching – the inherent problems with nutritional research that are, unfortunately, largely unavoidable. To begin I’d like to refresh you on the hierarchy of research usefulness, but before I can do THAT we need to discuss what the point of science is in the first place.

The scientific method is this: Come up with an idea (a hypothesis) and then try to find evidence either for or against it, with most of your time being spent trying to prove it wrong. If it can’t be proven wrong, it’s accepted as being potentially true.

This is a good system for a few reasons:

- If you set out to prove something right that’s not all that hard to do. This is because you can set up the parameters of your experiment in such a way as to get the result that you wanted anyway. If I wanted to see if a certain supplement helps you lose fat, all I would need to do is get some people, put them in a calorie deficit, give them my supplement, and see if they lose fat…which they will. If I was trying to prove my theory wrong, however, I would have three groups, all on the same basic diet:

- One taking my supplement

- One taking something they think is my supplement (this controls for the placebo effect…another thing for another day)

- One not taking anything, the control group

I’d then compare results between all three to see if the group taking my supplement did better than the control and placebo groups.

A key thing here to keep in mind for the rest of this piece is that this is controlled – the people all ate the same basic diet. Without this level of control, it would be impossible to know if any variation between people was due to the supplement or due to the differences between what they were eating.
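To see why the extra groups matter, here is a minimal sketch in Python of that three-group trial. Every number in it is made up for illustration: `DEFICIT_LOSS_KG`, `PLACEBO_EFFECT_KG` and `SUPPLEMENT_EFFECT_KG` are assumptions, not data from any study, and the supplement is deliberately set to do nothing at all.

```python
import random
import statistics

# Hypothetical values, chosen only to illustrate the design.
DEFICIT_LOSS_KG = 4.0       # fat lost from the calorie deficit alone
PLACEBO_EFFECT_KG = 0.3     # extra loss from believing you take something
SUPPLEMENT_EFFECT_KG = 0.0  # the supplement itself does nothing here
PEOPLE_PER_GROUP = 200

rng = random.Random(42)  # fixed seed so the sketch is repeatable


def simulate_group(takes_pill, pill_is_real):
    """Simulate fat loss (kg) for one group on the same basic diet."""
    results = []
    for _ in range(PEOPLE_PER_GROUP):
        loss = DEFICIT_LOSS_KG + rng.gauss(0, 1.0)  # person-to-person noise
        if takes_pill:
            loss += PLACEBO_EFFECT_KG
        if pill_is_real:
            loss += SUPPLEMENT_EFFECT_KG
        results.append(loss)
    return results


supplement = simulate_group(takes_pill=True, pill_is_real=True)
placebo = simulate_group(takes_pill=True, pill_is_real=False)
control = simulate_group(takes_pill=False, pill_is_real=False)

mean_supplement = statistics.mean(supplement)
mean_placebo = statistics.mean(placebo)
mean_control = statistics.mean(control)

print(f"Supplement group lost {mean_supplement:.2f} kg on average")
print(f"Placebo group lost    {mean_placebo:.2f} kg on average")
print(f"Control group lost    {mean_control:.2f} kg on average")
print(f"Supplement vs placebo: {mean_supplement - mean_placebo:+.2f} kg")
```

Looked at on its own, the supplement group loses plenty of fat, which is exactly the result a biased experiment would report. Only the comparison against the placebo and control groups shows that the supplement added nothing beyond the diet.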

This ‘other stuff’ is referred to as a list of confounding variables – things that are not the thing you are testing, but which may affect what the outcome of an experiment is. If, for example, I was to try to find out whether broccoli improved reaction time I would need to be sure that my participants all had similar reaction times before the experiment to control for outliers, then I’d need to make sure that on the day of the trial half of them hadn’t been awake on a bender for 3 days and the others hadn’t just slammed 2 cans of Monster Energy. On a more serious note, if it was a visual trial I would need to ensure that all participants either had perfect vision or were using glasses/contacts as required.

Similarly, in diet studies looking at, for example, the effect of certain foods on bodyweight it’s critical to control for things like total calories – sounds obvious, but it’s not often done. In many studies looking at low carb diets, for example, protein intake isn’t controlled and because low carb diets tend to be higher in protein it’s never clear whether any result is because of what you cut out or what you replace it with.

- It’s good because things are only ever potentially true, or often stated as being supported by evidence. Scientists hedge their bets because something can ALWAYS come along to disprove something, and an honest researcher will change the story as soon as the science dictates it. If tomorrow we found a weird cave in the Guatemalan jungle where things fall upwards rather than downwards, the entire theory of gravity would either be scrapped or radically changed.

- On a similar note, it strongly limits the confidence that we have in certain ideas. If you run a trial and it supports your idea, but it was only in 9 people, then while you can say your idea is supported by the evidence, you also need to note that the evidence is pretty scarce and so right now you don’t know for sure.

- And finally, it illustrates the limitations of our experience. Just because something happens and then something else happens, that doesn’t mean one caused the other, or even that they are related at all. In order to claim that they are related you need to control the parameters a lot more.

Every day a cockerel crows then the sun comes up, so I think the cockerel causes the sunrise.

To test this, I would need to control the parameters. One morning I would need to leave things as they are, then the next morning I’d need EVERYTHING to be the same, but I’d hide the cockerel inside, so he doesn’t crow.

If the sun comes up anyway, I was wrong.

Similarly if I believe a certain food causes stomach cramps it wouldn’t be enough to just say that’s my experience, I would need to test it.

I could, for example, eat the exact same diet for 8 days, the first 4 containing that food and the remaining days not containing it. Only by doing this a number of times would I really know.

And if I wanted to be able to say that the food caused the same symptoms for everyone, I’d need to do the same trial with hundreds of other people.

The scientific method is a philosophy of objectivism – it doesn’t matter what is correlated with what; what does the controlled evidence say? And with that in the bag, we can look to the hierarchy.

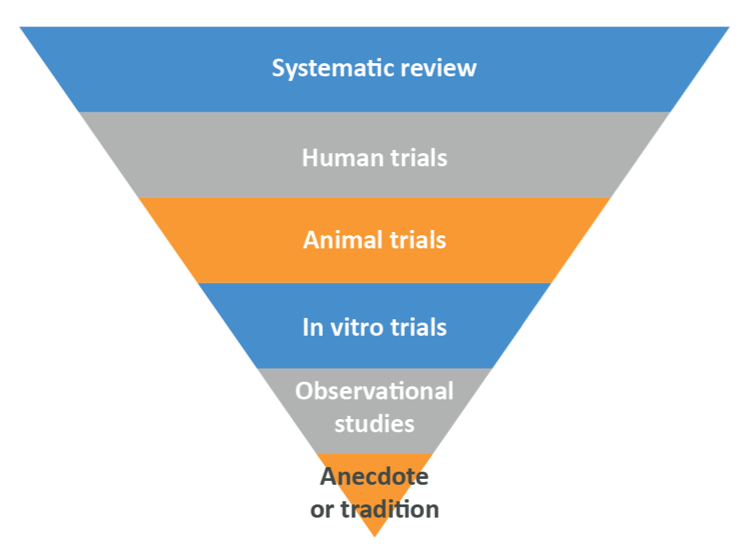

There is a lot to say about this, but to keep things brief we’ll look at each of the layers real quick, then I’ll get into what I need to say. Starting at the bottom, the research that produces the least trustworthy results.

- Anecdote and tradition: “It works for me” or “That’s what we’ve always done”. Useful because of course if it’s your personal experience, you HAVE noticed certain results from your endeavours and so can continue with somewhat reliable results. Problem is you don’t actually know why the results occurred (because of the thing, only part of the thing, or something else) and you also don’t know if others will experience the same. This kind of evidence is useless for anything other than what you want to do with your own life, and even then, it’s far from the best option

- Observational trials: Uncontrolled studies where researchers will spot patterns. They may be case control studies, where a bunch of people with a problem and a bunch of people without it are asked about their eating habits (or other things in other contexts), then the data are analysed to see whether those with the problem are or are not more often exposed to something. Or they may be cohort studies, where a bunch of people without a problem are asked what they eat, then followed for a period of time. Researchers will then see if those who do something have a certain problem more often than those who don’t

- In vitro trials: Tests done in test tubes or petri dishes on isolated cells or similar

- Animal trials: Studies on animals. Cheap and easy to control

- Human trials: Studies on people. More expensive, and much harder to control

- Systematic reviews: Researchers will gather a ton of other studies using really strict criteria, then attempt to find out what the whole body of literature says. Remember earlier when I said one study on 9 people isn’t much use? It is if you have 10 of them and they all say the same thing…

These are incredibly useful, but they are only as good as the data they can include, and so rely on the rest of the pyramid to exist.
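As a rough illustration of why pooling helps, here is a sketch of one simple way of combining studies, a fixed-effect inverse-variance average. The ten effect sizes and their standard errors are invented for the example, and real systematic reviews involve far more than this (strict study selection, quality assessment, checks for heterogeneity), so treat it as a toy rather than a description of how any particular review was done.

```python
import math


def pool_fixed_effect(estimates, standard_errors):
    """Combine study estimates, weighting each by 1 / SE^2."""
    weights = [1.0 / se ** 2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


def confidence_interval(estimate, se, z=1.96):
    """Approximate 95% confidence interval for an estimate."""
    return estimate - z * se, estimate + z * se


# Ten hypothetical small studies of the same effect (arbitrary units).
# Each one is imprecise: a standard error of 1.0 around a small effect.
estimates = [0.8, 1.3, 0.5, 1.1, 0.9, 1.4, 0.7, 1.0, 1.2, 0.6]
standard_errors = [1.0] * len(estimates)

single_low, single_high = confidence_interval(estimates[0], standard_errors[0])
pooled, pooled_se = pool_fixed_effect(estimates, standard_errors)
pooled_low, pooled_high = confidence_interval(pooled, pooled_se)

print(f"One study alone: {estimates[0]:.2f} ({single_low:.2f} to {single_high:.2f})")
print(f"All ten pooled:  {pooled:.2f} ({pooled_low:.2f} to {pooled_high:.2f})")
```

With these made-up numbers, the interval for a single study comfortably includes zero (no effect), while the pooled interval does not. The individual studies haven’t changed; there is simply more information once they are combined.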

As you go up the pyramid the reliability of the results increases because you’re going from nonhuman things to humans, and so the biology starts to line up with what we want. Along with this, though, comes the issue that control becomes harder.

Observational trials aren’t controlled at all – sure there’s some mathematical wizardry you can do to make the results a bit more reliable, but control just isn’t there. In vitro trials are extremely easy to control because you can do whatever you want to do to some cells, but cells on their own act differently to cells in complex living things. Animal trials can be controlled closely because – and whether this is moral or not is a little beyond the point here – you can do more or less what you like to an animal and an ethics committee won’t complain. Yes, you have to treat your animals well by giving them proper shelter and enrichment/toys to play with, but you have them in a cage and you literally control what food they have access to.

Human trials are a different story, and here’s the rub. Most nutrition research isn’t done on humans in well controlled conditions, and for a pretty simple reason: controlling human nutritional trials is extremely difficult. As mentioned above, in order to do a really good trial, a fair test, you need to do a few things, and nutrition trials make all of them harder:

- You need to make sure that everyone in the group being studied does the same thing, but people are notoriously difficult to get to stick to a diet (if people could easily control what they ate then nobody would need a nutrition coach to help them lose weight…) and so you can’t REALLY control their intake. Sure, you can ask people to eat a certain diet and send them off with instructions, but who the hell knows if they stick to it?

- You need a control group who do everything the same barring the diet, but the problem is that people eat really varied diets, so the members of any control group will either be eating different diets to each other, or will be eating a diet they wouldn’t usually eat

- You need a placebo group, but you can’t make someone think they ate something when they didn’t

This means that most human research comes in two different forms: Observational trials where high degrees of control are basically impossible, and poorly controlled, self report trials where researchers depend on the participants to accurately tell them what they’ve been eating in between visits to the clinic.

There is a way to control human trials really well: you keep people in a metabolic ward – which is similar to a hospital ward in many ways – where you can control their food intake 100% and objectively measure what’s going on. But those trials are exorbitantly expensive and so aren’t used all that often. Not only that, but you can only realistically use a metabolic ward trial for things that can be tested short-term.

One really good example is the famous NuSI experiment run by Dr. Kevin Hall which, in extremely well controlled conditions, showed that the rate of fat loss in his overweight participants was no greater on a ketogenic diet than on a high sugar diet, provided calories and protein were matched (3). This kind of trial is all well and good, but it would not be possible to, for example, test the effect of certain kinds of foods on cardiovascular disease in this manner, thanks to the fact that the effects would take decades to show up. You can look at the short term effects of food and infer the long term effects, but this is prone to error, and so that’s where observational trials come in.

The majority of the nutritional research on which the headlines you read (and indeed a lot of public policy) are based is observational in nature. To demonstrate, we can look at a 2019 paper entitled Diet and colorectal cancer in UK Biobank: a prospective study, which you can read in full here.

The purpose of this study was to discover a connection between red meat consumption and colorectal cancer, so the researchers interviewed a lot of people (nearly half a million!) about their dietary habits, then followed them for an average of around 6 years to assess rates of colorectal cancer in the cohort. Of course, people change their diet over time, so participants were asked to fill out an online questionnaire a few years down the line to reassess intake, and roughly 30% did.

After the trial period the data were collected, and rates of cancer were compared between those of different meat intakes, discovering that more portions per week = more risk of cancer. This was reported in news outlets as another study showing that red meat causes cancer.

But there are some problems with this, namely:

- This study relies 100% on the accurate memory and honesty of participants. Even if we assume that you’re honest, are you really able to remember what you ate for lunch – including portion size – more than a day or two ago? Now factor in that most people aren’t as food-conscious as you’re likely to be…

- While the researchers did some statistical wizardry to account for various different confounding variables (other stuff such as smoking status that could account for the elevated cancer risk), in their final analysis they didn’t account for total calorie intake or fruit and vegetable intake. Indeed, they also noted that F&V intake tended to decrease as meat intake increased, which is interesting as F&V intake is associated, even in this paper, with reduced colorectal cancer risk

- Only some of the participants did the second response, so we have literally no idea what the other people were eating for the whole time the experiment ran. Do you eat the same diet now as you did 6 years ago?
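To make the fruit and vegetable point concrete, here is a small simulation of how an unmeasured confounder can create an association out of nothing. All of the probabilities are invented for illustration, and in this made-up world meat has no effect on disease risk at all by construction. It is a sketch of the general problem, not a reanalysis of the UK Biobank data and not a claim about what the real effect of meat is.

```python
import random

rng = random.Random(0)  # fixed seed so the sketch is repeatable
N_PEOPLE = 200_000

# Hypothetical probabilities, for illustration only.
P_LOW_FV_IF_HIGH_MEAT = 0.7  # heavy meat eaters tend to eat less F&V
P_LOW_FV_IF_LOW_MEAT = 0.3
RISK_LOW_FV = 0.08           # disease risk depends ONLY on F&V intake
RISK_HIGH_FV = 0.05

people = []
for _ in range(N_PEOPLE):
    high_meat = rng.random() < 0.5
    p_low_fv = P_LOW_FV_IF_HIGH_MEAT if high_meat else P_LOW_FV_IF_LOW_MEAT
    low_fv = rng.random() < p_low_fv
    # Meat intake is deliberately not used when assigning disease.
    disease = rng.random() < (RISK_LOW_FV if low_fv else RISK_HIGH_FV)
    people.append((high_meat, low_fv, disease))


def risk(rows):
    """Share of the people in rows who developed the disease."""
    return sum(disease for _, _, disease in rows) / len(rows)


def relative_risk(rows):
    """Risk in high-meat eaters divided by risk in low-meat eaters."""
    high = [r for r in rows if r[0]]
    low = [r for r in rows if not r[0]]
    return risk(high) / risk(low)


crude_rr = relative_risk(people)
rr_low_fv = relative_risk([r for r in people if r[1]])
rr_high_fv = relative_risk([r for r in people if not r[1]])

print(f"Ignoring F&V intake, meat 'raises' risk: RR = {crude_rr:.2f}")
print(f"Among low F&V eaters only:               RR = {rr_low_fv:.2f}")
print(f"Among high F&V eaters only:              RR = {rr_high_fv:.2f}")
```

The crude comparison makes meat look harmful, but once people are compared only against others with similar fruit and vegetable intake the apparent effect largely disappears. An analysis that doesn’t account for a confounder like this can’t tell the two situations apart.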

And it gets worse. Even if we assume their data is reliable (which I hope I’ve at least strongly suggested it isn’t), what of their actual results? At worst, when accounting for a number of potentially confounding variables (not including calorie or plant intake) the Hazard Ratio for red AND processed meat consumption was 1.17, meaning that consumers were 17% more likely to get cancer in their entire lifetime (reflective of other research in the area).

Considering the background risk (the risk you have in general) for colorectal cancer in the UK is 7% for men and 6% for women born after 1960 (4), this means that the risk goes to 8.19% and 7.02% respectively. This is an absolutely minuscule difference, to the point that many in the scientific community have stated that the association is ‘Weak’ (5). Note that smoking 15-24 cigarettes per day is associated with an increase in lung cancer risk of over 2500% (6) for an idea of what a strong association looks like.
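The arithmetic behind those figures can be sketched in a few lines. The inputs are the numbers quoted above; the function names are just for this example, and multiplying a lifetime background risk by a hazard ratio is the same simplification used in the text rather than a formal way of converting between the two.

```python
HAZARD_RATIO = 1.17  # red and processed meat, as quoted above
BACKGROUND_RISK = {"men": 0.07, "women": 0.06}  # UK lifetime risk (4)
SMOKING_INCREASE_PERCENT = 2500  # 15-24 cigarettes per day (6)


def absolute_risk(background, relative_risk):
    """Scale a background risk by a relative risk (simplified)."""
    return background * relative_risk


def percent_increase_to_relative_risk(percent):
    """A 17% increase is a relative risk of 1.17, and so on."""
    return 1 + percent / 100


for group, background in BACKGROUND_RISK.items():
    exposed = absolute_risk(background, HAZARD_RATIO)
    extra_points = (exposed - background) * 100
    print(
        f"{group}: {background:.2%} -> {exposed:.2%} "
        f"(about {extra_points:.2f} percentage points more)"
    )

smoking_rr = percent_increase_to_relative_risk(SMOKING_INCREASE_PERCENT)
print(f"Meat relative risk:    {HAZARD_RATIO}")
print(f"Smoking relative risk: {smoking_rr:.0f} or more")
```

A ‘17% increase’ sounds large until it is applied to the background risk, where it works out at roughly one extra percentage point. The smoking figure, by contrast, corresponds to a relative risk of 26 or more.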

In fact, thanks to the sheer number of confounding factors, issues with the supporting animal and in vitro trials, and the tiny association between meat consumption and cancer, amongst other issues, a critical review of all epidemiological evidence up to 2015 (7) included the scathing sentence “In conclusion, the state of the epidemiologic science on red meat consumption and CRC is best described in terms of weak associations, heterogeneity, an inability to disentangle effects from other dietary and lifestyle factors, lack of a clear dose-response effect, and weakening evidence over time”.

Now people who defend this kind of work will state that observational trials aren’t SUPPOSED to be used to draw conclusions. Instead, they’re just there to generate hypotheses to be later tested in randomised controlled trials, which would be a good argument if that’s how the world worked, but it isn’t. P. Cole wrote as early as 1993 that “…nearly all of the hypotheses that are said to be generated by a study are unworthy of the name: they are seen only once, they are usually weak, and many are contradicted by data…” (8). This contradiction by data is a really important thing – observational studies will find a pattern, this is reported in the media, then when later controlled trials are done to test the same thing they rarely match up.

For example, while the association between red meat and cancer is pretty regularly seen in the observational literature, the authors of a rarely-spoken-about 2017 systematic review of experimental studies concluded “there is currently insufficient evidence to confirm a mechanistic link between the intake of red meat as part of a healthy dietary pattern and colorectal cancer risk” (9).

This is a major issue because, truthfully, this is typically ignored by policymakers and public health professionals who act on observational data alone in many cases – red meat being one of them.

So what can YOU as a practitioner do about this?

There are a few things you can keep an eye on, ways you can read into research and ways of checking whether what you read holds up, but for now we would advise this to any budding practitioner:

- Find out what kind of study you’re reading, or reading about in a press release. If it’s an observational trial, just ignore it. Straight up, just ignore it – at BEST it can propose a hypothesis, but that’s it, and you don’t need more questions, you need answers.

- Look to see if it was done on humans or animals. Animal trials are interesting and there are situations where they’re appropriate – for example rodents are good models for testing muscle protein synthesis stimulation – but in pretty much all other cases animal research doesn’t translate to humans, so if it’s in rats, nematodes, fruit flies or rabbits then ignore it.

- If it’s in humans, look to see how the nutrition was controlled. If participants were asked to do something then sent away to act on it and report back, be cautious. You may need to find a few other papers that reach the same conclusions before trusting the data. Similarly, look to see not only what is being tested, but what is being missed – for example, if one group is asked to eat a low fat diet and the other a high fat diet, don’t just look at that. Questions need to be asked about the foods chosen, and the foods missed out (is one group eating less plant matter?). If this isn’t reported, that leaves an unanswered question about the conclusions.

- And, in short, wherever possible try to read systematic reviews of randomised controlled study data. These are a little harder to track down, but you’ll save a TON of time by reading these and not the individual papers. Sure, to be a true scientist you need to be reading primary research, but you’re not, you’re a coach, and so review papers are a damn good place to start.

Or, if you don’t have time for that, consider looking at an evidence-based nutrition course that can summarise the data for you while being transparent about where their data comes from, in order to let you check all the figures you’re being presented. The BTN Advanced Nutrition Coaching Course – Active IQ Level 4 is such a course, with students leaving us more educated, more confident, more competent, and able to go on to further critically analyse information/research for themselves in the future.

They will also receive a level 4 qualification, awarded by Active IQ, enabling our students to call themselves qualified nutrition coaches unlike almost anyone else in the UK health and fitness scene.

If you’d like to join us on October 28th, hit this link to sign up HERE

And if you’d like to chat to me (Tom) before then, my booking link is HERE

I look forward to talking to you.

Thanks for reading.

References

1. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5099084/

2. https://science.sciencemag.org/content/359/6377/747

3. https://academic.oup.com/ajcn/article/104/2/324/4564649

4. https://www.cancerresearchuk.org/health-professional/cancer-statistics/statistics-by-cancer-type/bowel-cancer/risk-factors#heading-Zero

5. https://www.ncbi.nlm.nih.gov/pubmed/20663065

6. https://www.cancerresearchuk.org/health-professional/cancer-statistics/statistics-by-cancer-type/lung-cancer/risk-factors#heading-Two

7. https://www.ncbi.nlm.nih.gov/pubmed/25941850

8. Cole P. The Hypothesis Generating Machine. Epidemiology. 1993;4(3):271.

9. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5407540/

10. https://www.ncbi.nlm.nih.gov/pubmed/31555799